Last updated: 27.06.2025

One of the most powerful features of FortiGate hardware appliances is the hardware acceleration chipset built into the platform. In specific situations, it forwards traffic directly from the incoming interface to the outgoing interface without passing through the CPU of the system. This can save a huge amount of system load on your FortiGate.

In most cases, hardware acceleration works flawlessly. In some very rare cases, however, it may cause problems, or it may not work at all, so that the packets have to be handled by the CPU of your FortiGate.

This guide will lead you through the important troubleshooting steps.

This guide covers FortiOS version 7.4, but most of the information applies to other FortiOS versions too. Be aware that some CLI commands may have been changed or removed in other versions.

We are using the terms CP for content processor, NP for network processor and SoC for system on a chip. A detailed description can be found in the hardware acceleration guide referenced at the end of this blog article.

Step 1: Are you using a VM platform?

You can see what type of hardware platform you are using by executing the CLI command:

get hardware status

VM platforms do not support hardware acceleration at all.

Although there are a few virtual offloading and acceleration techniques through DPDK and vNP on the KVM platform, this guide focuses on hardware-based acceleration through the FortiGate NPs, CPs and SoCs.

Step 2: Are you using software switches?

Traffic passing through software switches is not being offloaded to the hardware chipsets.

You can check whether any software switches are configured by executing the following CLI command on your FortiGate:

show system interface | grep "type switch" -f

A possible solution for some setups is to replace the software switches with hardware switches. Be aware that setups that tunnel WiFi connections into the software switch, or that are based on VXLAN, cannot be changed to hardware switches, since hardware switches do not support CAPWAP or VXLAN. There may be further limitations not mentioned here, so please verify first that the transition from software to hardware switches is possible in your case.

Important note: NP7 hardware chipsets are capable of offloading software switch based sessions (including VXLAN and other supported protocols), but only if the “intra-switch-policy” setting is set to “explicit”. As soon as intra-switch-policy is set to explicit, the FortiGate creates sessions for all traffic going through a software switch. Once a session exists, the traffic is visible in the session table and in the flowtrace utility, and the session can be offloaded to the NP7 chipset.

Step 3: Are you using PPPoE interfaces?

With the following CLI command you can check whether you are using PPPoE interfaces, which cannot be offloaded either:

show system interface | grep "mode pppoe" -f

Please note that the “modem” interface is a FortiOS default entry that in most cases is not in use anywhere.

PPPoE interfaces and all subordinate interfaces, such as VLANs on PPPoE interfaces, do not support hardware acceleration at all.
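If you work from a saved configuration backup instead of the live CLI, the checks from Step 2 and Step 3 can be approximated offline. The following Python sketch assumes that the backup uses the usual config / edit / set / next / end layout. The function name interfaces_with_setting and the SAMPLE text are hypothetical and only serve as illustration.

```python
def interfaces_with_setting(config_text, setting):
    """Return the names of `config system interface` entries
    that contain the exact line `set <setting>`."""
    names = []
    in_section = False
    depth = 0        # depth of nested config blocks inside the section
    current = None   # name of the interface entry being read
    for raw in config_text.splitlines():
        line = raw.strip()
        if not in_section:
            if line == "config system interface":
                in_section = True
            continue
        if line.startswith("config "):
            depth += 1
        elif line == "end":
            if depth == 0:
                break            # end of `config system interface`
            depth -= 1
        elif depth == 0 and line.startswith("edit "):
            current = line[5:].strip().strip('"')
        elif depth == 0 and line == "next":
            current = None
        elif depth == 0 and current and line == "set " + setting:
            names.append(current)
    return names


# Hypothetical sample, not taken from a real device.
SAMPLE = '''
config system interface
    edit "wan1"
        set mode pppoe
    next
    edit "lan-sw"
        set type switch
    next
    edit "dmz"
        set mode static
        config ipv6
            set ip6-mode static
        end
    next
end
'''

print(interfaces_with_setting(SAMPLE, "mode pppoe"))
print(interfaces_with_setting(SAMPLE, "type switch"))
```

Keep in mind that a backup normally contains only non-default values, so a setting that is missing from the file is simply at its default.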

Step 4: Are you using software-based inter-VDOM links?

There are two different types of inter-VDOM links: NP-accelerated links and software-based links. Software-based VDOM links cannot be offloaded to a network processor (NP) and are therefore not accelerated. NP-based inter-VDOM links are supported on all models that have an NP unit inside. There is one link available per built-in NP chipset. A lot of additional information is available at https://docs.fortinet.com/document/fortigate/6.4.0/hardware-acceleration/851990/configuring-inter-vdom-link-acceleration-with-np6-processors

Step 5: Is NP or CP acceleration mode set to “none”?

If you have set the NP or CP acceleration mode to “none” in your IPS settings, IPS inspection will not be offloaded to the NP or CP chipset of your hardware.

config ips global

set np-accel-mode none

set cp-accel-mode none

end

Some additional global settings can be made under config system npu. Here are some examples:

config system npu

set fastpath [disable|enable]

set capwap-offload [enable|disable]

set sw-np-bandwidth [0G|2G|…]

end

You can find more information on all of these settings in the CLI guide.

To check if these features are enabled on your hardware, use the following command:

diagnose npu <chipset name> npu-feature

For example:

diagnose npu np6lite npu-feature

Step 6: Is your session handled by a session helper or ALG?

Sessions that require FortiOS session helpers or ALGs cannot be offloaded. For example, FTP sessions cannot be offloaded to NP processors because they use the FTP session helper. As a further example, the DNS session helper on the FortiGate prevents offloading of DNS traffic. For this case, Fortinet has published a dedicated KB article.
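To estimate whether a given flow is likely to hit a session helper, you can compare its protocol and destination port with the configured helper entries. The Python sketch below assumes that the helpers are listed under config system session-helper with name, protocol and port fields. This section is not shown in this article, so please verify it against your own configuration. The function names, the entry IDs and the SAMPLE text are hypothetical.

```python
def parse_session_helpers(config_text):
    """Parse the entries of `config system session-helper` into dicts."""
    helpers = []
    in_section = False
    entry = None
    for raw in config_text.splitlines():
        line = raw.strip()
        if line == "config system session-helper":
            in_section = True
        elif not in_section:
            continue
        elif line == "end":
            break
        elif line.startswith("edit "):
            entry = {}
        elif line == "next":
            if entry:
                helpers.append(entry)
            entry = None
        elif line.startswith("set ") and entry is not None:
            parts = line.split(None, 2)   # "set", key, value
            if len(parts) == 3:
                entry[parts[1]] = parts[2].strip('"')
    return helpers


def matching_helper(helpers, protocol, port):
    """Return the helper name for an IP protocol number and a
    destination port, or None if no helper entry matches."""
    for helper in helpers:
        if helper.get("protocol") == str(protocol) and helper.get("port") == str(port):
            return helper.get("name")
    return None


# Hypothetical sample, not taken from a real device.
SAMPLE = '''
config system session-helper
    edit 1
        set name ftp
        set protocol 6
        set port 21
    next
    edit 2
        set name dns-udp
        set protocol 17
        set port 53
    next
end
'''

helpers = parse_session_helpers(SAMPLE)
print(matching_helper(helpers, 6, 21))
print(matching_helper(helpers, 6, 443))
```

A match only indicates that a helper is configured for that protocol and port. Whether a specific session is actually offloaded still has to be confirmed on the device.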

Step 7: (If IPSec is used) Did you disable hardware offloading in the IPSec tunnel?

You can disable NP offloading for single IPSec tunnels with the following configuration setting:

config vpn ipsec phase1-interface

edit <p1-name>

set npu-offload disable

next

end

You should use this setting very carefully, since disabling NP offloading can increase the system load considerably. We recommend disabling NP offloading for testing purposes only and enabling it again once testing is finished.

Please note that NP acceleration is also disabled if you encapsulate the ESP traffic in TCP packets:

config vpn ipsec phase1-interface

edit <name>

set transport tcp

next

end

Step 8: (If IPSec is used) Does your NP support the chosen algorithms?

Configure your tunnels and send some data through them. Then, execute the command

diagnose vpn ipsec status

on the CLI of your FortiGate and check which chipset is handling your crypto operations. In the best case, all operations are handled by your hardware chipsets. Otherwise, you will see non-zero counters under “SOFTWARE”.

You can fix this by choosing other algorithms that your NP supports.

You can also check the offloading status per tunnel using the following command:

diagnose vpn tunnel list name TunnelName

Look for the npu_flag there.

00 = Both IPsec SAs loaded to the kernel

01 = Outbound IPsec SA copied to NPU

02 = Inbound IPsec SA copied to NPU

03 = Both outbound and inbound IPsec SA copied to NPU

20 = Unsupported cipher or HMAC, IPsec SA cannot be offloaded
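If you collect the tunnel output from several devices, a small script can translate the flag values from the list above. The following Python sketch assumes that the flag appears in the output as a token of the form npu_flag=NN. The function name decode_npu_flags is hypothetical, and values that are not in the list above are reported as unknown rather than guessed.

```python
import re

# Meanings copied from the list above.
NPU_FLAG_MEANING = {
    "00": "Both IPsec SAs loaded to the kernel",
    "01": "Outbound IPsec SA copied to NPU",
    "02": "Inbound IPsec SA copied to NPU",
    "03": "Both outbound and inbound IPsec SA copied to NPU",
    "20": "Unsupported cipher or HMAC, IPsec SA cannot be offloaded",
}


def decode_npu_flags(tunnel_output):
    """Return a (flag, meaning) tuple for every npu_flag=NN token in the text.

    Assumption: the flag is printed as two hexadecimal digits after `npu_flag=`.
    """
    results = []
    for flag in re.findall(r"npu_flag=([0-9a-fA-F]{2})", tunnel_output):
        meaning = NPU_FLAG_MEANING.get(flag, "not listed in this guide")
        results.append((flag, meaning))
    return results


# Usage: paste the captured output of the tunnel list command as a string.
for flag, meaning in decode_npu_flags("npu_flag=03"):
    print(flag, "=", meaning)
```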

Step 9: Is auto-asic-offload or np-acceleration disabled on the firewall policy?

You can disable the “auto-asic-offload” feature on a “per-policy” basis on the FortiGate.

config firewall policy

edit <fw-policy-id>

set auto-asic-offload disable

set np-acceleration disable

next

end

You should use these settings very carefully, since disabling auto-asic-offload or NP acceleration can increase the system load considerably. We recommend disabling offloading for testing purposes only and enabling it again once testing is finished.

Step 10: Are passive health-checks enabled in SD-WAN?

Hardware acceleration is disabled for traffic that is subject to passive SD-WAN health monitoring.

Below you can find a CLI configuration example for passive monitoring in SD-WAN. Note that, in addition to configuring the health check in passive mode (set detect-mode passive), you must also enable passive-wan-health-measurement on the firewall policies that accept traffic for the monitored member. When you enable passive-wan-health-measurement on a policy, auto-asic-offload is automatically disabled on that policy.

config system sdwan

config health-check

edit "Passive"

set detect-mode passive

next

end

end

config firewall policy

edit 14

set passive-wan-health-measurement enable

next

end

# show full firewall policy 14 | grep auto

set auto-asic-offload disable

Step 11: Is the firewall policy inspection mode set to proxy based?

Proxy-based inspection cannot be hardware accelerated. All proxy-based inspection is therefore handled by a process running on the CPU.

You can find any proxy-based firewall policies with the following CLI command:

sh | grep "inspection-mode proxy" -f
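Steps 9 to 11 all come down to per-policy settings, so they can be checked together against a saved configuration backup. The Python sketch below assumes the usual config firewall policy / edit <id> / set ... / next / end layout. The function name audit_policies and the SAMPLE text are hypothetical, and the sketch does not handle nested config blocks inside a policy.

```python
# Policy settings named in Steps 9 to 11 that prevent offloading.
POLICY_BLOCKERS = {
    "set auto-asic-offload disable": "Step 9: auto-asic-offload disabled",
    "set np-acceleration disable": "Step 9: np-acceleration disabled",
    "set passive-wan-health-measurement enable": "Step 10: passive SD-WAN health measurement",
    "set inspection-mode proxy": "Step 11: proxy-based inspection",
}


def audit_policies(config_text):
    """Map each firewall policy ID to the reasons why it is not offloaded."""
    report = {}
    in_section = False
    policy_id = None
    for raw in config_text.splitlines():
        line = raw.strip()
        if line == "config firewall policy":
            in_section = True
        elif not in_section:
            continue
        elif line == "end":
            break                      # assumes no nested config blocks
        elif line.startswith("edit "):
            policy_id = line[5:].strip()
        elif line == "next":
            policy_id = None
        elif policy_id and line in POLICY_BLOCKERS:
            report.setdefault(policy_id, []).append(POLICY_BLOCKERS[line])
    return report


# Hypothetical sample, not taken from a real device.
SAMPLE = '''
config firewall policy
    edit 14
        set passive-wan-health-measurement enable
        set auto-asic-offload disable
    next
    edit 15
        set inspection-mode proxy
    next
    edit 16
        set action accept
    next
end
'''

for pid, reasons in audit_policies(SAMPLE).items():
    print(pid, reasons)
```

Policies that do not appear in the result may still be excluded from offloading for the reasons described in the other steps.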

Step 12: Is sFlow sampling enabled on the Interface?

Configuring sFlow on any interface disables all NP6, NP6XLite, or NP6Lite offloading for all traffic on that interface.

Step 13: Is “strict-header-checking” enabled?

Enabling the strict header check disables all hardware acceleration. This behaviour is documented in a Fortinet KB article. You can check whether the setting is enabled with the following CLI command:

sh | grep "check-protocol-header strict" -f

Step 14: Is the traffic going over DTLS?

DTLS cannot be offloaded to the FortiGate hardware.

You may want to use IPSec VPN for access point connections instead of DTLS.

Step 15: Do you have DoS Policies in place?

Hardware Acceleration for DoS Policies needs to be enabled manually. More information is available under: https://docs.fortinet.com/document/fortigate/7.6.3/hardware-acceleration/599810/dos-policy-hardware-acceleration

Step 16: Device Identification has not determined the device yet

As long as the MAC address of the device has not been identified, the FortiGate will not offload the traffic and instead process it for device identification.

Diagnose commands

You can find out which ports are connected to which NP chip with the following command:

diagnose npu <chipset name> port-list

For example:

diagnose npu np6lite port-list

You can also print out some interesting session statistics for NP sessions with the command:

diagnose npu <chipset name> session-stats

For example:

diagnose npu np6lite session-stats

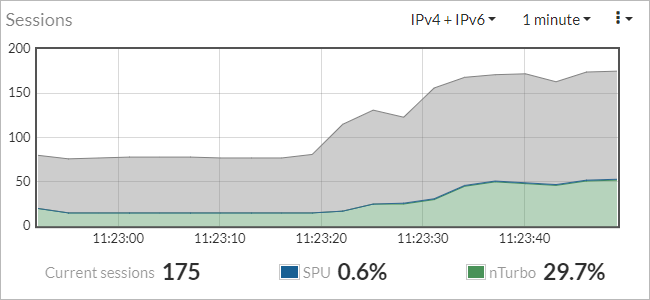

How many Sessions are offloaded to the SPU or nTurbo?

There is a widget called “Sessions” on the FortiGate dashboard. In this widget, you can see how many sessions are currently open and how many of those sessions are offloaded to the SPU or nTurbo hardware.

More information on this topic is available in the hardware acceleration guide.

Interesting information sources

The best information source for all your hardware acceleration questions is the “parallel path processing guide” from Fortinet. This guide is available for your specific FortiOS version and hardware platform model.


Great article !

Another thing that I’ve found out the hard way. If you have a sflow-sampler under interface it will also disable hardware acceleration

For example:

edit "external"

set ip 1.2.3.4 255.255.255.0

set vlanforward enable

set sflow-sampler enable

set sample-rate 250

set polling-interval 30

Hello Dominique

Thank you very much for your comment on this topic.

Yes, you are right. According to https://docs.fortinet.com/document/fortigate/7.0.0/hardware-acceleration/631057/sflow-and-netflow-and-hardware-acceleration hardware acceleration is being disabled for all affected sessions.

Thank you very much for sharing this very interesting finding. I take the chance and will add this into our post shortly.

Best regards,

BOLL Engineering Tech Team

PS: You have the “vlanforward” setting enabled on that interface… We see this a lot on configurations that have been upgraded several times since FortiOS 6.0 or before. Personally, I disable this setting on all interfaces in NAT mode VDOMs. Have a look at https://community.fortinet.com/t5/FortiGate/Technical-Note-vlanforward-interface-parameter/ta-p/198171?externalID=FD39910 for further information on that. I thought I would mention it by the way, since it’s very interesting too…